This study explores how organisations working in domestic abuse, intimate partner violence (IPV), and stalking support are responding to the growing presence of artificial intelligence (AI) technologies, particularly chatbots and automated agents, in frontline services. The research is conducted in collaboration with Google’s At-Risk Research Team, bringing together academic expertise and industry insight on this important issue.

In recent years, AI tools have rapidly entered public service spaces, including healthcare and social care. Many of these systems rely on machine learning — a type of AI that identifies patterns in large datasets to generate predictions or responses. This can make them seem intelligent or human-like, especially in tools like chatbots. But it’s important to remember that these systems don’t understand language or emotion in the way people do — they generate text based on probability, not empathy or awareness.

While AI-powered chatbots can offer some promising benefits — such as providing 24/7 access to information, supporting overstretched staff, or extending reach to underserved groups — their use in safety-critical settings also raises serious concerns. These systems can behave unpredictably, provide incorrect or misleading advice, and may even foster emotional dependence in vulnerable users. And in sensitive environments like domestic abuse services, the risks of misinformation or unintended harm are especially high.

This project aims to better understand how support organisations are thinking about these technologies: how they’re being introduced, what ethical and practical concerns are being raised, and why some services are choosing to reject or avoid them entirely. Our focus is not just on the tools themselves, but on the decision-making processes that shape whether — and how — they’re used.

We’re particularly interested in “AI-powered chatbots” and “AI agents.” Chatbots are digital systems that simulate conversation with users, often appearing as pop-up chat windows on websites or in messaging apps. Some follow simple scripts, while others use machine learning to generate responses in real time. More advanced systems, sometimes referred to as “AI agents,” can carry out tasks or make decisions with greater autonomy. While these may offer flexibility, they also introduce new layers of complexity around safety, trust, and control.

Despite their increasing visibility, very little research has examined how domestic abuse and IPV support services are actually engaging with these tools — or what factors are shaping their decisions.

This study aims to fill that gap, and we invite you to participate!

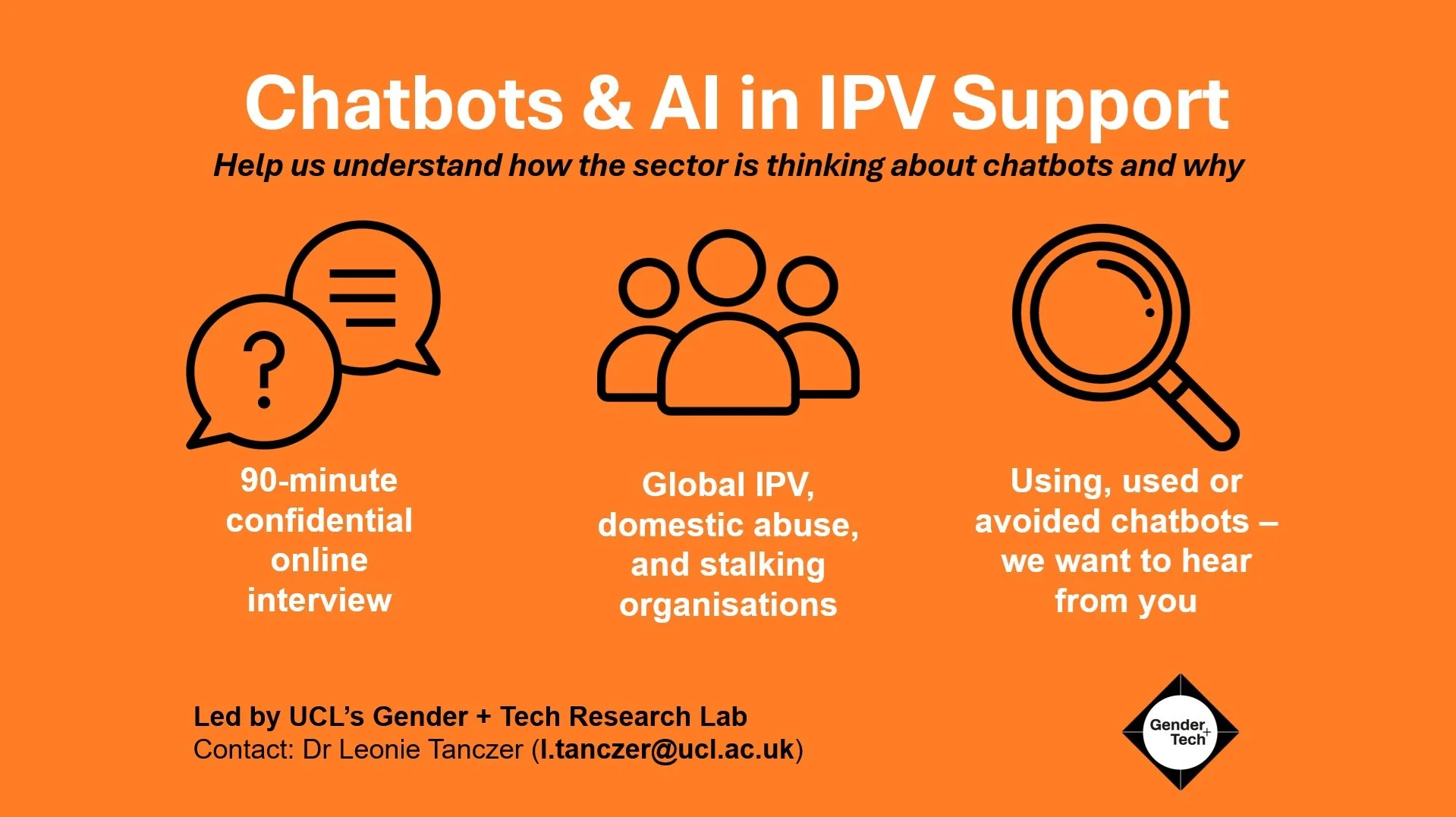

We are currently conducting confidential interviews with professionals in the domestic abuse and IPV sector to explore the opportunities and risks associated with chatbot and AI agent use. We’re keen to speak with a wide range of organisations: those who are actively using chatbots, those who have used them in the past, and those who have chosen not to adopt them.

You don’t need to be an expert in AI, tech-savvy, or currently using these tools to participate. In fact, we’re especially interested in your concerns, questions, and reflections, regardless of whether your organisation has used a chatbot before. We have also prepared a briefing document to ensure everyone shares an understanding of the key terms we’ll be using.

Participation involves a 90-minute online interview at a time that suits you. We welcome staff from all roles, whether you’re making decisions about digital tools, working on the frontline, or supporting survivors in any capacity. All interviews are fully confidential and ethically approved.

If you're interested in taking part or would like to learn more, please get in touch with study lead Dr Leonie Tanczer and Research Assistant Maya Ashkenazi at l.tanczer@ucl.ac.uk.

Feel free to share this invitation with others in your network — especially those working in domestic abuse, IPV, and stalking support roles — who may want to contribute.
